No, International Students didn’t Come to Canada to Use Food Banks

Recently I’ve noticed a surge in news articles and social media posts about international students, ranging from claims that they use food banks as a free source of food to accusations that they are worsening the housing crisis. While some of these concerns were legitimate, I found the coverage to be quite shallow, often wrong, and failing to get at the root of the issues.

Worse, I started reading many comments online that blame international students for causing those problems, most of them unjustified and some borderline xenophobic (e.g., “they should go back home fast”). I was hoping this would be a temporary phenomenon, but unfortunately it has become a regular sport on many Internet forums, from the comment sections of national news outlets (CBC) to student subreddits like r/uwaterloo. I’ve seen similar comments on the Korean web, blaming international students in Korea for all kinds of problems.

I found it quite upsetting to browse the web. As someone who started out in Canada as an international student, this is a very important and personal topic for me. I came to Canada from South Korea 18 years ago as an international student. Since graduating from the University of Waterloo in 2012, I’ve been working in Toronto, and am now a naturalized Canadian.

Even though I don’t struggle with the same issues as these students anymore, I still remember what it was like to be an international student. Everyone’s ready to take advantage of you, and the system here treats you far worse than other classes of foreigners (e.g., foreign workers and refugees). This is what drove me to speak out on behalf of international students today.

The food bank controversy

A couple of months back, there was a news article about a surge of international students overloading local food banks. The crisis supposedly started with a video that “suggested food banks in Canada could provide visitors with a regular supply of free food”. This overloaded the food banks, and many food banks across the country put a blanket ban on international students.

The inflation and housing crisis hits us all, and there is no doubt that food banks are also in a tough situation. However, I found this blanket ban on international students not only misdirected but dehumanizing.

First, let’s deal with the abuse of food banks (i.e., using food banks as a regular source of food). Obviously, such abuse is wrong and deserves condemnation. It’s not clear to me, however, that the majority of students were visiting the food banks for this reason. To begin with, the video in question was in Malayalam. Let’s put that into perspective: in 2019, Indians made up 34% of all international students, and Malayalam is spoken by about 3% of Indians (link to wiki). If Indian students mirrored India’s overall language mix, Malayalam speakers would make up only about 1% of all international students (34% × 3%). It’s a big stretch to say that international students all watched this video and started going to food banks.

Further, the article later states “[…] once students were informed about how food banks work, they were apologetic.” It sounds to me like the small number of people who followed this “advice” were willing to stop their bad behaviour. Anyone can make mistakes, and misinformation can spread in any group, not just among international students (just look at those TikTok challenges). Admittedly, it was an outrageous video, but that doesn’t mean we should assume hundreds of thousands of international students are unethical based on this one video. Until they leave Canada, they are simply our neighbours with less social support; we should treat them with dignity, and not as a faceless “they”.

Second, assuming they are not abusing food banks, the reaction “international students should be responsible for themselves” is unjustified. The unprecedented inflation hit all of us hard. In Toronto alone, food bank usage went up 50% year over year (source). A large number of people are suffering, international students or not.

I saw many people online claiming that international students are set up to struggle because they were required to bring as little as $10,000/year (as per regulation until last year). This is not quite correct. First, the rule does not mean most of them actually bring only the minimum. Students do research and try to prepare appropriately for the city they are going to. No one plans to study abroad to live a food-bank lifestyle; those who can pay international tuition can certainly lead better lifestyles back home. Most importantly, there were no news articles about the old minimum funding rule causing issues before the pandemic. The pandemic put an unprecedented and unexpected amount of budget pressure on everyone, including the international students who were already here. Most international students, just like us, have a set budget, and they will struggle with a sudden spike in inflation. It’s not because international students as a whole are any less responsible than the rest of us, or because they deliberately plan to come here with insufficient funding.

It’s OK to debate whether we should raise the minimum financial requirement so that students don’t struggle, but we cannot blame the international students who are already here, whom we let in, for not foreseeing the inflation and for seeking help from food banks to meet basic human needs. We don’t rank people in food bank lines by the level of their misery. I don’t see why international students should be treated any differently in this regard.

On top of the usual inflation, international students are vulnerable to a unique type of inflation.

Unregulated tuition increases.

When I was studying computer science at UWaterloo, the international tuition for first year was around $19,000. It was quite expensive, but fortunately my parents could help me at the time. What I found far less thrilling, though, was that my tuition had gone up to $23,000 per year by my last year of school. That’s an annual increase of 7%, and close to $10,000 extra over the course of the undergrad program. Worse, there was no warning whatsoever from the university that this would happen. I got lucky in that I had a well-paying co-op job and was able to absorb the increase.

I thought this was a temporary affair, but shockingly, the University has continued to raise international tuition at a steady rate of 7% per year ever since, for at least the last 17 years. That’s far more than the average inflation rate of around 2% per year over the same period.

At today’s rates, it costs $310,000 in tuition fees to complete the CS program. On top of that, because tuition fees increase by 7% a year, students will need to come up with an extra $30,000 to actually complete the program, something the university does not disclose to students despite a consistent pattern of tuition fee increases for decades. The university should be up-front about the total cost of the program with prospective and current students.
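To see how a fixed annual increase compounds, here is a small Python sketch. It assumes, for illustration only, that fees rise by exactly 7% every year and that a student pays four years of fees starting from my first-year figure of $19,000; real programs bill per term and the rate can vary, so treat the output as an estimate rather than an actual bill.

```python
def total_tuition(first_year: float, years: int, annual_increase: float) -> float:
    """Total tuition paid when the fee grows by a fixed rate every year."""
    return sum(first_year * (1 + annual_increase) ** year for year in range(years))


def surprise_cost(first_year: float, years: int, annual_increase: float) -> float:
    """Extra cost compared with paying the first-year fee every year."""
    return total_tuition(first_year, years, annual_increase) - first_year * years


if __name__ == "__main__":
    # Assumed inputs: $19,000 first-year fee, four years of fees, 7% per year.
    print(f"total: ${total_tuition(19_000, 4, 0.07):,.0f}")  # total: $84,359
    print(f"extra: ${surprise_cost(19_000, 4, 0.07):,.0f}")  # extra: $8,359
    # Growth factor over 17 years: 7% tuition hikes vs. 2% inflation.
    print(f"{1.07 ** 17:.2f}x vs {1.02 ** 17:.2f}x")  # 3.16x vs 1.40x
```

Under these assumptions the fourth-year fee works out to about $23,300, in line with the $23,000 mentioned above, and the student pays roughly $8,400 more than four years at the first-year price; the exact figure depends on how many years of fees you pay. Over 17 years, a 7% annual increase roughly triples the fee, while 2% inflation raises prices by only about 40%.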

Aside from the ethics or justification of charging international students three times more, this is not fair. Since tuition is growing exponentially, the problem of “surprise” tuition increases has gotten far worse in dollar terms. Tens of thousands of dollars in unexpected extra cost is a lot. Then add the pandemic inflation on top of it. It’s no wonder so many international students are struggling.

There should be a moratorium on the current system of unregulated tuition increases. At minimum, schools should be required to be transparent with students about future fee increases, especially once a student is enrolled. If Rogers increases your phone bill in the middle of your contract, that’s illegal. I don’t see why it’s OK to pull an extra $30,000 from unsuspecting students just because they are foreigners. I wonder if it is because the university knows students are in a difficult position to leave after sinking a lot of money into their degree.

Update (2024-01-29): It looks like B.C. is doing the right thing: under its plan, "post-secondary institutions would be required to clearly communicate full tuition costs to international students for the whole time someone is studying" (source). There is no reason Ontario shouldn't do this, too.

International Students aren’t just a source of money.

International students are important to Canada, and not just for the money. As long as Canada continues to bring in more immigrants, international students make the best candidates. They have our education, speak our language, and share our values.

Even if they do not end up immigrating to Canada, they remain important to Canada. They will generally be friendlier toward Canada, and some of them will go on to become important figures in the future. For example, the first president of South Korea (Syngman Rhee, https://en.wikipedia.org/wiki/Syngman_Rhee) went to university in America, and this shaped the future of the relationship between the two countries.

No change to the minimum funding requirement can cover such large, unchecked tuition increases. It’s good for Canada to educate students from diverse backgrounds with bright futures. I am not so sure about educating just the wealthiest.

Strip-mall Colleges

Another accusation I read is that foreign students are enrolling in diploma mills as an easy way to get permanent residency (PR). Such a take flips the offender-victim relationship, and I wanted to correct this misunderstanding.

First, it’s important to understand that people outside Canada don’t know much about the Canadian school system. Even when all the information is available on the Internet, it’s difficult to know where to look if you are not from Canada (I had not heard of UWaterloo before coming to Canada). That is why there is a government-sanctioned list of schools that can host international students, known as designated learning institutions (DLIs). It’s supposed to be a stamp of approval, so that we don’t issue study permits for random institutions or admit low-quality candidates. The entire immigration system around international students and their pathways hinges on this list being meaningful (e.g., we issue open work permits to new graduates and award extra points toward permanent residency).

Unfortunately, it doesn’t seem like the DLI list is maintained effectively, if at all. Now we have a cottage industry that basically rips off foreigners (example 1, example 2), with our own government lending it legitimacy by issuing permits for these students to enter the country.

Does going to these colleges help students get Canadian PR easily? Not really. The system the Harper government put in place in 2015 (called “Express Entry”) ranks potential immigrants against each other and regularly invites the highest-scoring candidates to apply. It’s quite a cut-throat system, if you ask me. If what people say about the problematic colleges is true (i.e., they don’t set you up for a job), then there is basically no chance these students will be able to get PR here. Sure, they will get an open work permit after graduation, with no health benefits, but that will not lead to any meaningful future in Canada (no, working for UberEATS will not get you PR). When their open work permit expires, they will have to leave the country.

It is not good for Canada, either. Chances are these students will go home and share their negative experiences of Canada. The only winners are the shareholders of the colleges. It would have been much better for both Canada and the international students if the government had done its job and not let this happen at all.

In short, the international students coming to Canada to attend low quality colleges are just getting screwed. They are not going to steal your jobs. They are victims in shitty situations. We should be questioning instead why our system allows this to happen.

But what about Conestoga College, which is public?

Even legitimate schools have smelled money and are getting on the train. As mentioned earlier, there is no regulation on what colleges can charge international students or how much they can increase those fees, so international tuition makes a lucrative funding source. In fact, these days it is international students, not our province, that fund our post-secondary education (example 1, example 2).

Conestoga College is an egregious example of this. It hosted 10,000 students in total in 2012. In 2022, it hosted 30,000 international students. Of course, the quality of teaching will degrade significantly; it is simply not possible to scale quality teaching that fast.

It’s a dangerous game for the college to play. On one hand, the college is incentivized to do this to get more funding, since public schools are not allowed to increase tuition for Canadian students and the provincial government is not interested in increasing funding for education. International students are an attractive way out of this. On the other hand, this will damage the reputation of the institution and of Canada. Sadly, the decision makers do not care much about the consequences because it’s “good business”. Things will not change without public pressure or appropriate media coverage.

Housing Problems

The entire Waterloo region is estimated to have 648,000 people. If you rapidly increase the population by about 5% (30,000 people) over a short period of time, the system will experience problems. It doesn’t matter what the source of that additional population is (e.g., Canadians, foreigners, etc.).

What angers me greatly is that this is where you will hear people blaming or mocking the international students (for rooming with multiple people), rather than pointing out the issue with the international student system. It’s OK to talk about reducing the number of study permits, or other ways to solve the housing problems. It is not fair to criticize or ostracize those who are already here for taking our houses away. It’s not OK to treat people poorly because they are international students.

We let them in. All these students have done is pay a lot of money to study here. If anything, they are the ones getting screwed. We should take responsibility.

Ask questions:

- Where did all that tuition go? 30,000 students in just one year would have paid half a billion dollars in tuition. For comparison, TCHC (https://www.torontohousing.ca/) could build a thousand units with that. Could some of it have been used to build more student housing instead?

- Why are the federal and provincial governments not doing anything about it? Is it because the victims of the housing crisis are the economically disadvantaged (powerless) and international students (even more powerless)?

Why did I decide to write this?

Being an international student in Canada isn’t easy. International students actually bring a lot of money to Canada, yet surprisingly some people continue to think they aren’t contributing economically (example), or, worse, accuse them of being leeches. Aside from the absurdity of trying to rank people by their “contribution to Canada”, would the same people advocate that poorer students pay more into the education system? I don’t think this is what Canada stands for.

Still, let’s make an economic comparison just for the sake of argument. One could invest (not spend; they still get to make money on it!) around $300,000, equivalent to the CS program tuition today, in Canada and become a permanent resident with all the associated benefits. Clearly, this is a “good enough contribution” to our country to live here freely and enjoy all the benefits. International students who spend that much money outright in our economy ought to be treated better than they are today.

If the students had the time, it would have been more advantageous to take the “invest to get PR” path and then enroll in school, but they are young and can’t afford to do that.

I believe the reason international students are treated so poorly is that they have very little power in our society. Contrary to the stereotype, most of them don’t have an infinite pool of money, and they don’t all drive a BMW. They have schoolwork to keep up with and have already spent all their savings on their education. They don’t have stable status or a support network in Canada. They are not eligible for most scholarships. No one powerful understands their experience or speaks for them, even when the criticism is completely off base. They become the punching bag.

I was quite disappointed by how these issues were portrayed in the news and social media. I kept seeing unfiltered and frankly dangerous opinions without any explanation or counterpoints. No one asked the probing follow-up questions. When you don’t ask the right questions, instinct takes over. It quickly becomes us vs them.

I also noticed that discussions around international students had become very one-dimensional: are they contributing to or leeching off our society? I wanted to add more colour to this discussion.

I enjoyed my time as an international student. I learned a lot in school, made lifelong friendships and am living comfortably in Canada. At the same time, reading about the recent controversies dug up a lot of bad memories: why is tuition going up every year? Why is it so difficult to get medical care as an international student? I paid a lot of federal and provincial taxes as a student. Why am I not getting any benefits?

I am not an international student anymore, but I did not forget my past and I wanted to do my part to help. I wanted to tell you a different perspective, the one I think is underrepresented.

If you read this and found it insightful, please share it with your friends or your MP and MPP.

OS Detecting QMK keyboard

What bits are exchanged when you plug in a USB keyboard? Can you detect the OS with those bits?

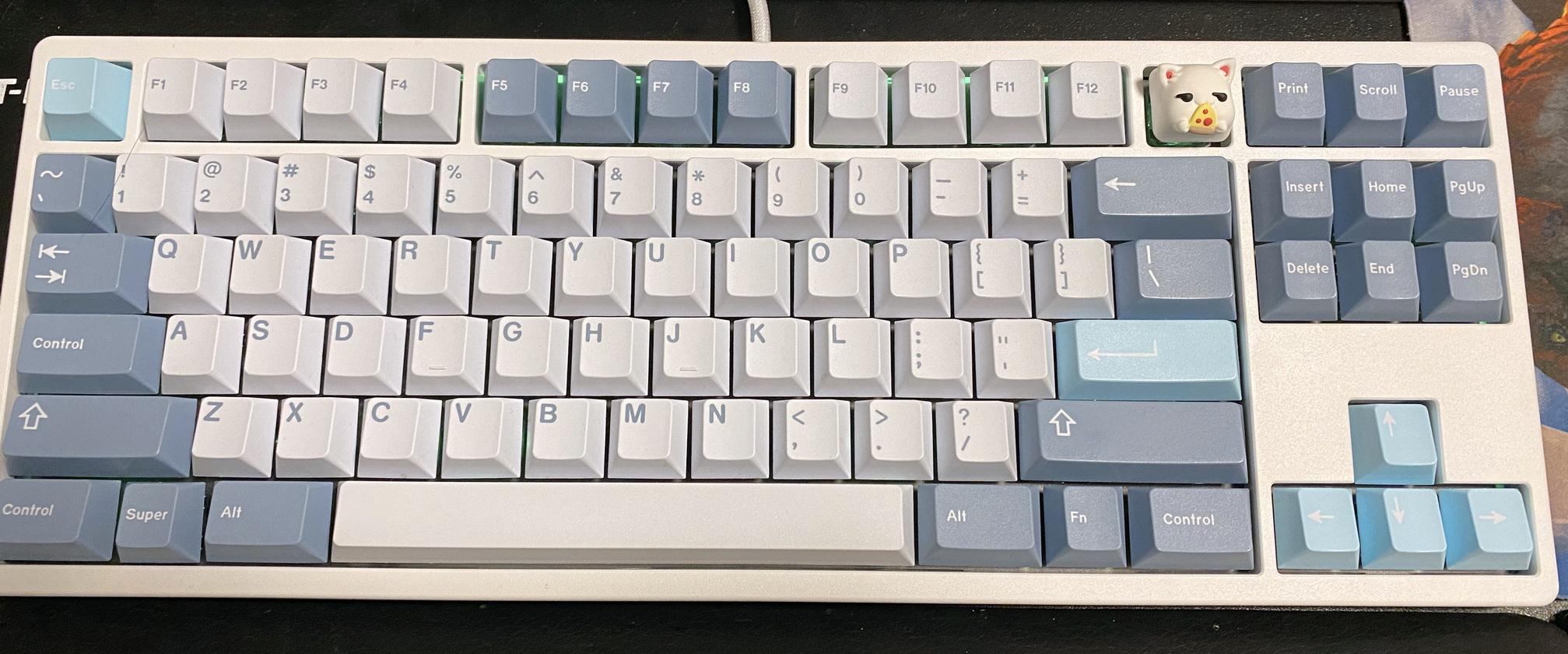

Recently, I got a mechanical keyboard named KBDfans Tiger Lite as a gift (Thanks Rose!). The keyboard runs QMK (Quantum Mechanical Keyboard), an open-source keyboard firmware that makes custom modifications easy. I was pretty excited to get my hands on it, since I'd been wanting to customize my keyboard firmware.

There were a few tweaks I wanted to make directly on the keyboard that were either impossible or hard to do reliably otherwise:

- Consistent Media key layout across computers.

- On Windows, AutoHotkey can't intercept keystrokes for Admin applications unless it also runs as Admin, which I didn't want to do.

- On Mac:

  - I want to use a single modifier key (i.e., Right Alt) to switch between languages. MacOS doesn't allow single-modifier shortcuts, and the Mac Fn key, which can switch languages, is non-trivial to simulate.

  - I found the MacOS emoji shortcut ^⌘+Space legitimately hard to enter, compared to the Windows equivalent ⊞+. (period).

- I wanted the keyboard to automatically switch to the MacOS-friendly layout when I switch from my personal PC to my work laptop, and vice versa.

The first three items were fairly simple to do with QMK. However, the last item, OS detection, proved to be non-trivial. QMK doesn't have such a feature built in, and a USB device doesn't get much information about its host (i.e., the PC) at all.

I was able to put together something that works over the holidays, and I wanted to share the details of how it works.

Prior Work

The idea was first described in an Arduino project called FingerprintUSBHost, and Ruslan Sayfutdinov (KapJI) implemented a working version for QMK. Without the existing examples, I wouldn't have been able to come up with this idea.

Just Merge it!

After merging KapJI's change, the code mostly worked, but it was missing one major feature for me: when I switched between the PC and the Mac, OS detection stopped working after the first device.

After reading KapJI's OS-detection code, I understood that there is a function, get_usb_descriptor, that returns whatever USB descriptor type the host requests, and that the OS-detection code records how often each value of the wLength field appears in requests for "String-type descriptors". I had a vague understanding of what a "USB descriptor" might be (something related to USB device initialization) but wasn't sure how it works. I understood that this counter has to be reset but wasn't sure where to do it. There was documentation for the feature, but it did not explain why this works or what those fields meant. I decided to dig deeper since I was having a slow week.

How does the OS detection work at all?

After some Googling, I found an amazing tutorial on USB called USB in a NutShell by Craig Peacock at beyondlogic.org. Here's a summary of what I learned about USB that is relevant:

- Every USB device goes through a setup process. As part of it, the host requests a bunch of descriptors to learn about the device. "Descriptors" are generic structures used to describe a USB device, and there are many subtypes of them.

- A USB device is described by one Device descriptor:

  - A Device descriptor contains Configuration descriptors (but usually there is only one).

  - A Configuration descriptor contains Interface descriptors.

  - An Interface descriptor corresponds to what we end-users think of as an actual "device" in the OS. An Interface descriptor contains Endpoint descriptors.

  - Endpoint descriptors describe the communication pipes for their interfaces, which is how an interface actually exchanges bits and bytes with the host.

- Descriptors can refer to each other. For example, a device has a manufacturer name and a product name, but these strings are not stored in the Device descriptor itself. Instead, the Device descriptor refers to a String descriptor by its index (the iManufacturer and iProduct fields), and that String descriptor contains the actual string data.

- When the host requests a String descriptor, the wLength field of the request specifies the maximum number of bytes the host is willing to accept.
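To make the hierarchy concrete, here is a toy model of it in Python. The class and field names are simplified and hypothetical (real descriptors are packed byte structures defined by the USB specification, with many more fields); the point is only to show the nesting, and how the Device descriptor points at String descriptors by index instead of embedding the text. The manufacturer and product strings are made-up examples.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Endpoint:
    address: int  # e.g. 0x81: bit 7 set means IN (device to host), endpoint number 1


@dataclass
class Interface:
    number: int
    endpoints: List[Endpoint] = field(default_factory=list)


@dataclass
class Configuration:
    value: int
    interfaces: List[Interface] = field(default_factory=list)


@dataclass
class Device:
    i_manufacturer: int  # index of the manufacturer String descriptor
    i_product: int       # index of the product String descriptor
    configurations: List[Configuration] = field(default_factory=list)


def describe(device: Device, strings: Dict[int, str]) -> str:
    """Resolve string indices, as a host does after fetching the String descriptors."""
    return f"{strings[device.i_manufacturer]} {strings[device.i_product]}"


# Hypothetical keyboard: one configuration, one interface, one IN endpoint.
keyboard = Device(
    i_manufacturer=1,
    i_product=2,
    configurations=[
        Configuration(value=1, interfaces=[Interface(number=0, endpoints=[Endpoint(0x81)])])
    ],
)
strings = {1: "ExampleVendor", 2: "ExampleKeyboard"}  # index 0 (languages) omitted
print(describe(keyboard, strings))  # ExampleVendor ExampleKeyboard
```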

As an example, this was the full list of descriptors for my keyboard.

Putting it All Together

With this background, I was finally able to understand how the OS-detection works:

- When the keyboard is plugged in, the host asks for the device descriptor and its subparts.

- For this particular keyboard, there are 3 String descriptors of interest:

  - 0x00: List of supported languages for all String descriptors. For QMK, it's hard-coded as US English.

  - 0x01: The name of the manufacturer of the keyboard.

  - 0x02: The name of the product itself (i.e., the keyboard).

One interesting quirk that makes OS detection work is that real-world OSes request the same String descriptor multiple times with different wLength values, where wLength specifies the maximum size of the string that the host is willing to accept.

For example, Windows asks for the same product string with a wLength of 4, 16, then 256, twice each (Full sequence here). MacOS is slightly different.

So why does this particular call pattern occur? My guess is that this behaviour exists to work around defective USB devices. For Linux, I was able to find the source code it uses to query String descriptors. First, the behaviour that the OS-detection code looks for is consistent with this source code: Linux asks right away for strings with a wLength of 255 (0xff), and never asks again if the device is well-behaved. Second, below the initial String query, you can also see that Linux has different workarounds for defective devices, which didn't kick in for my keyboard.

While I can't read the source for Windows or MacOS, based on the Linux code it seems likely that the other OSes have similar workarounds for different USB devices. Luckily for me, these wLength patterns occur consistently enough for the OS-detection code to work reliably.
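The counting idea can be sketched in a few lines of Python. This is not KapJI's C code or QMK's actual detection rules: it only encodes the two patterns described in this post (Linux asking with 0xFF and never retrying, Windows probing with small lengths such as 4 and 16 before a large one), and the thresholds are my own hypothetical choices. MacOS would need its own rule based on its observed sequence.

```python
from collections import Counter
from typing import List


def guess_os(w_lengths: List[int]) -> str:
    """Guess the host OS from the wLength values of String descriptor requests."""
    counts = Counter(w_lengths)
    total = sum(counts.values())
    if total == 0:
        return "unknown"  # nothing observed yet
    # Linux: a well-behaved device only ever sees wLength 0xFF (255).
    if counts[0xFF] == total:
        return "linux"
    # Windows: the same string is probed with short lengths (4, 16) first.
    if counts[4] >= 1 and counts[16] >= 1:
        return "windows"
    return "unknown"


print(guess_os([0xFF, 0xFF, 0xFF]))        # linux
print(guess_os([4, 4, 16, 16, 256, 256]))  # windows
print(guess_os([]))                        # unknown
```

Looking up a missing key in a Counter returns 0, so the rules stay simple even when a given length was never requested.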

When to reset the OS detection data?

Now that I knew how OS detection works, I still had to find out where to reset the OS-detection counter, since the original code never cleared it.

My first attempt was to clear the counter when the host asks for the Device descriptor, since that is the top-most logical object of the device. Unfortunately, this didn't work for a couple of reasons. First, there is no guarantee that the Device descriptor is queried only once. Second, the supported-languages String descriptor (index 0) can be queried even before the Device descriptor itself, since it's independent of the Device descriptor.

After going through the QMK source code, I found an undocumented hook named notify_usb_device_state_change_user, which gets called on a USB reset. A USB reset happens whenever the KVM switch moves the keyboard between host devices.

The original code also doesn't specify how long to wait after the USB reset before running OS detection (it just says "probably hundreds of milliseconds"), but by now I knew the upper bound:

A USB compliant Host expects all requests to be processed within a maximum period of 5 seconds

Based on my observation, however, 1 second was plenty, so that's what I settled on, and now I have a cool, one-of-a-kind keyboard 😁
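Putting the reset and the delay together, the logic looks roughly like the Python model below. It is a hypothetical sketch, not QMK code: on_usb_reset stands in for the notify_usb_device_state_change_user hook, the classify function is passed in (any wLength-based rule would do), and the clock is injectable so the behaviour can be exercised without real USB hardware. The 1-second settle time is the value I found to be enough, well under the 5-second limit.

```python
import time
from typing import Callable, List, Optional


class OsDetector:
    """Toy model: collect String-descriptor wLengths after a USB reset, decide later."""

    def __init__(
        self,
        classify: Callable[[List[int]], str],
        settle_seconds: float = 1.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.classify = classify
        self.settle_seconds = settle_seconds
        self.clock = clock
        self.w_lengths: List[int] = []
        self.reset_at: Optional[float] = None
        self.result: Optional[str] = None

    def on_usb_reset(self) -> None:
        # Stands in for notify_usb_device_state_change_user: a new host is
        # enumerating the keyboard, so forget everything about the old one.
        self.w_lengths.clear()
        self.reset_at = self.clock()
        self.result = None

    def on_string_request(self, w_length: int) -> None:
        # Called for every String descriptor request from the host.
        self.w_lengths.append(w_length)

    def poll(self) -> Optional[str]:
        # Call periodically (e.g. from a main loop). Returns None until the
        # settle time has passed since the last reset, then the cached guess.
        if self.reset_at is None:
            return None
        if self.result is None and self.clock() - self.reset_at >= self.settle_seconds:
            self.result = self.classify(self.w_lengths)
        return self.result


if __name__ == "__main__":
    now = [0.0]  # fake clock so the example runs instantly
    detector = OsDetector(
        classify=lambda ws: "linux" if ws and all(w == 0xFF for w in ws) else "unknown",
        clock=lambda: now[0],
    )
    detector.on_usb_reset()           # keyboard switched to the Linux PC
    detector.on_string_request(0xFF)
    now[0] = 0.5
    print(detector.poll())            # None: still inside the settle window
    now[0] = 1.0
    print(detector.poll())            # linux
```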

Use apt-get build-dep to install build dependencies

Or, how to build the latest tmux on Ubuntu

There are times you want to use more up-to-date software than what comes with your Linux distribution (distro). I had to do this when I was using Ubuntu 16.04. I wanted to use the latest tmux for its advanced mouse support, but Ubuntu 16.04 came with an older version of tmux without the feature. Since LTS versions rarely do a major-version upgrade, the only way to get the newer version was to build it from source.

Sometimes, building from source can be as easy as ./configure && make && sudo make install. More often than not, though, it can be a real test of patience. What I usually do is run the build script, read the error message, search for the name of the missing component, rinse and repeat.

Here's an example of me trying to build tmux from scratch on Ubuntu (⛔ indicates a trial-and-error cycle):

# Get Git

$ sudo apt-get install -y git

# Get the source

$ git clone https://github.com/tmux/tmux.git; cd tmux

# Well, this is the only executable, so let's go.

$ sh autogen.sh

...

aclocal: not found ⛔

# after googling

$ sudo apt install -y automake

$ sh autogen.sh # ✅ works this time.

$ ./configure

...

configure: error: no acceptable C compiler found in $PATH ⛔

# I guess I should install gcc, since that's a popular compiler.

$ sudo apt install -y gcc

$ ./configure

...

configure: error: "libevent not found" ⛔

# Let's install the development package

$ sudo apt install -y libevent-dev

$ ./configure

...

configure: error: "curses not found" ⛔

# I just happen to know that the package is not called libcurses-dev 😏

$ sudo apt install -y ncurses-dev

$ ./configure

...

config.status: error: Something went wrong bootstrapping makefile fragments

for automatic dependency tracking. If GNU make was not used, consider

re-running the configure script with MAKE="gmake" (or whatever is

necessary) ... ⛔

# What is not at all obvious from the message above is that

# I am actually missing make entirely.

$ sudo apt install -y make

$ ./configure # succeeds ✅

$ make

...

./etc/ylwrap: line 175: yacc: command not found ⛔

# Googles to find out that GNU bison provides yacc

$ sudo apt install -y bison

$ make

# Finally succeeds ✅

This was just 6 tries, but with bigger packages, this trial-and-error approach can be extremely time consuming. Worse, even after building, you can still end up with random missing features because some of the optional features are included only when the library is found on the system.

Fortunately, on a Debian-based distro (like Ubuntu), there is a quick way to install the build dependencies for software that already exists in the repo: the relatively obscure command apt-get build-dep installs all of a package's build dependencies for you.

Additional Notes for Ubuntu

On Ubuntu, the first step to using apt-get build-dep is to enable the source code repositories. Source repositories contain the metadata needed to find out the build-time dependencies of packages. We need to do this once on Ubuntu because recent versions ship with the source repositories disabled by default. Presumably, this is to make apt-get update faster, but I was never able to confirm it. If anyone knows the answer, please let me know.

To enable the source code repositories, you can uncomment the lines starting with deb-src, or run the following command:

# The old sources.list will be backed up as /etc/apt/sources.list.bak

$ sudo sed -i.bak 's/^# *deb-src/deb-src/g' /etc/apt/sources.list && \
sudo apt-get update

# restore with sudo mv /etc/apt/sources.list.bak /etc/apt/sources.list

Real-life Example: Building tmux

With the source repository enabled, let's try building tmux again.

Install the build dependencies of tmux.

$ sudo apt-get build-dep -y tmux

Now we can start building.

$ cd tmux
$ sh autogen.sh && ./configure && make -j 4

For the latest tmux on Ubuntu 20.04, you will get an error like this (as of Feb 2022):

./etc/ylwrap: line 175: yacc: command not found

This is because newer versions of tmux added a dependency on yacc. I confirmed this by reading the CHANGES file.

We can fix this by installing the yacc-compatible GNU bison: sudo apt-get install -y bison. Re-running the build should work.

If everything went well, you should be able to run ./tmux to test it.

In the end, I still had to go through one trial-and-error cycle (yacc), but that's five fewer than the original.

Bonus 1: Safe Installation of Compiled Packages

Here's one more tip for managing source-compiled packages. Many guides at this point would tell you to run sudo make install, but a nasty surprise may be waiting for you afterwards: you are at the mercy of the package maintainer to be able to uninstall the package cleanly. Often the uninstall target just doesn't exist, in which case you can't easily uninstall the software. There are a couple of ways to handle this (e.g., GNU stow, a topic for another blog post). Here I will show how you can use checkinstall to easily create a reasonably well-behaved Debian package (.deb) from the source. To install checkinstall, run the following command:

$ sudo apt-get install -y checkinstall

Now you can run checkinstall to create a dpkg (Debian package) and install it.

It will ask you a bunch of questions; you can go with the defaults most of the time. For the version string, I used 9999 (a convention I borrowed from Gentoo) to indicate that I want my package to always be treated as the latest version.

# make a package - may have to answer some prompts

$ checkinstall --pkgname=tmux --pkgversion=9999 \
    --install=no --fstrans=yes

# install the package (replace amd64 with your architecture)

$ sudo dpkg -i tmux_9999-1_amd64.deb

Now you can see that your tmux is installed as /usr/local/bin/tmux (technically, that's the wrong place for a file installed by a Debian package, but it's not that important).

Now if you try to install tmux from apt, it will say you have the latest version.

$ sudo apt-get install -y tmux

Reading package lists... Done

Building dependency tree

Reading state information... Done

tmux is already the newest version (9999-1).

# And to uninstall this package,

# you can simply run sudo apt-get remove -y tmux

Bonus 2: What if I just want to find the list of dependencies without installing them?

Found this answer on askubuntu.com:

# replace tmux with the package in question

apt-cache showsrc tmux | grep ^Build-Depends
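If you want to feed that output into another tool, the Build-Depends line can be turned into a plain list of package names. The Python sketch below is my own rough parser, not an official apt or dpkg API: it drops version constraints, architecture lists and build profiles, keeps every alternative in an "a | b" group, and ignores edge cases such as fields that wrap onto continuation lines. The sample line is made up for illustration and is not tmux's real dependency list.

```python
import re
from typing import List

# Version constraints "(...)", architecture lists "[...]" and build profiles "<...>".
_QUALIFIERS = re.compile(r"\([^)]*\)|\[[^\]]*\]|<[^>]*>")


def parse_build_depends(line: str) -> List[str]:
    """Return the package names mentioned in one 'Build-Depends:' line."""
    _, _, deps = line.partition(":")  # drop the "Build-Depends" field name
    names = []
    for dep in deps.split(","):
        for alternative in dep.split("|"):  # "a | b": either package satisfies it
            name = _QUALIFIERS.sub("", alternative).strip()
            if name:
                names.append(name.split(":")[0])  # drop ":any" / ":native" suffixes
    return names


# Made-up sample in the same format as the grep output (not tmux's real list).
SAMPLE = ("Build-Depends: debhelper-compat (= 13), bison, libevent-dev (>= 2.0), "
          "pkg-config:native, foo [linux-any] | bar <!nocheck>")
print(parse_build_depends(SAMPLE))
# ['debhelper-compat', 'bison', 'libevent-dev', 'pkg-config', 'foo', 'bar']
```

Note that apt-cache showsrc can print several source records, so the grep may return more than one line; run the function on each line separately.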

Bonus 3: What if I am on Redhat/Fedora?

Use dnf builddep or yum-builddep.

Dirt-cheap Serverless Flask hosting on AWS

Update 2023-01-30: Updated the code to work with CDK 2.0

Today I want to tell you how you can host a dynamic Flask application for cheap on AWS using Serverless technologies. If you are interested in hosting a low-traffic Flask app that can scale easily, and that can be deployed with a single command for almost free, you will find this blog helpful. If you are not interested in reading why I started on this journey, feel free to skip to the overview section.


My Low-Cost Hosting Journey

As a hobbyist programmer, one of the things I've spent a lot of time thinking about is how to host a dynamic HTTPS website (including this blog) as cheaply and easily as possible. The cheaply part refers to the literal dollar cost. I wanted to spend as little as possible and not be wasteful with what I am paying for. The easily part refers to the ease of development and deployment, like being able to stand up a new website with a single command. My programmer instinct told me to do as little manual work as possible.

You might ask, why not just host a static website? I just found being able to host dynamic service code very cool, and it requires less thinking, so that's what I am focusing on here.

A Cheap VM (2015)

The first obvious choice for me was to use a cheap VM. You can get an instance that can host a Flask website for less than $10/month. This is probably the most popular method today due to its low barrier to entry; after all, this is how everyone does their web programming locally. There is no shortage of documentation on how to set up NGINX with your Flask application. It involves a few clicks to get a new VM, then SSHing into the instance, and then installing your software.

However, I grew pretty unsatisfied with the setup over time:

- Setting up the instance was time consuming and tedious. I tried using solutions like Ansible to automate the setup within the instance but it took a long time to test and get it right. There were many manual steps. For example, the DNS entry for the website was outside the setup script. All these manual steps had to be documented, or else I would just forget about them and would have no idea how to bring the website up again.

- It also takes a lot of effort to set up an instance that is "prod"-ready. "Production" concerns include things like rotating logs so that they don't fill up your disk, and updating software so you don't end up running part of a botnet. Reading the access logs taught me that the Internet is a fairly dangerous place: you get a ton of random break-in attempts (mainly targeting PHP message boards, but there are others too).

- Since the setup was complicated, testing my changes in a prod-like setting was out of the question. So I just tested in prod.

- Setting up HTTPS took way more effort than I imagined. Even after Let's Encrypt came out, it was quite a hassle to make sure certificate renewal worked correctly and that the certificate survived the loss of an instance. I could have slapped an ELB in front to get the certificate from AWS, but that would have cost $15/month, so I decided against it.

- It was wasteful. Resource utilization was very low (single-digit % CPU) most of the time, which meant most of the money I paid for the instance was basically thrown away. Even the smallest instance size proved too coarse for my purpose.

- At the same time, the upper scaling limit was quite low. At most, the VM could handle a few dozen simultaneous requests, and I couldn't find a way to scale up without forking out at least $20 a month.

- It was really easy to lose data. To avoid that, I used the free-tier RDS instance for a year, but it started charging $10+/month after that (I eventually moved the data to DynamoDB to save cost, at the expense of rewriting some code).

ECS (early 2018)

My next attempt was to use Elastic Container Service (ECS). For those who don't know, ECS is a container orchestration engine from AWS. This was before Kubernetes became as dominant as it is today.

Dockerizing the application meant that I was at least able to launch the instance from the ground up easily, and that if the instance went down, ECS would start it back up. I still had to set up the whole NGINX + uWSGI + Flask combo, since ECS doesn't help with that. It solved some problems, but it was neither cheaper nor much simpler. It was still up to me to keep the instances up to date.

Adding CloudFormation to the mix (late 2018)

By the end of 2018, I had caught up with the whole Infrastructure-as-Code (IaC) thing, so I decided to migrate my ECS setup to a CloudFormation template. In case you are not familiar with it, CloudFormation (CFN) is AWS's IaC solution. Simply put, IaC lets you manage your infrastructure like code, so you can version control it, roll it back easily, and deploy your entire infrastructure with a single command.

This setup worked, and I was even able to make a very basic form of zero-downtime deployment work, by deploying another stack and swapping the Elastic IP between two instances. That was done outside CFN but it worked well enough. Deploying a whole new server with just a command was a cool achievement so I was proud of that.

However, it did take many, many days to get the template right. The CloudFormation template had almost no type checking. It wasn't easy to find out which fields were mandatory or not, other than by consulting the scattered documentation (it has one web page per data type... really?). The whole "edit-compile-test" iteration time was long. It took minutes for CloudFormation to tell me something was wrong, and then it took many more minutes for it to get back to the state where I could try another change.

Once the final CFN template was working, it was definitely something I never wanted to touch again. And there were still no cost savings.

Trying out Zappa (2020)

AWS Lambda came out in 2014 and popularized so-called "serverless" computing, also often called function-as-a-service (FaaS). I'd explain Lambda like this: Lambda lets you run a function rather than a whole operating system. A JSON event goes in, and your code runs based on it. You can call it as often as you'd like, because scaling is handled by Lambda. Lambda bills for usage with millisecond precision. If you don't use it, you don't pay for it. If you use it for a second a month, you pay for that second and nothing more. It's hard for me to overstate how revolutionary this is, considering that every single issue I highlighted above is a hard problem.

A minor bonus for hobbyists like us is that Lambda's free tier never expires, unlike EC2's, and is, in my opinion, pretty generous. You can host a low-traffic website for close to free, forever.
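To make the pay-per-use model concrete, here's a rough Python estimator. The free-tier and price figures in it are my assumptions based on AWS's published x86 pricing at the time of writing: 1 million requests and 400,000 GB-seconds free per month. Check the current Lambda pricing page before relying on them. monthly_lambda_cost is a hypothetical helper.

```python
# Assumed pricing (x86, at the time of writing); check the Lambda pricing page.
FREE_REQUESTS = 1_000_000           # free requests per month
FREE_GB_SECONDS = 400_000           # free compute per month
PRICE_PER_GB_SECOND = 0.0000166667  # USD
PRICE_PER_MILLION_REQUESTS = 0.20   # USD


def monthly_lambda_cost(requests, avg_duration_ms, memory_mb):
    """Estimate a month's Lambda bill after the always-free tier."""
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    billable_gb_seconds = max(0.0, gb_seconds - FREE_GB_SECONDS)
    billable_requests = max(0, requests - FREE_REQUESTS)
    return (billable_gb_seconds * PRICE_PER_GB_SECOND
            + billable_requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS)


# 500k requests of 100 ms each on a 256 MB function stay inside the free tier.
print(monthly_lambda_cost(500_000, 100, 256))
```

With these numbers, request count rather than compute time is usually the first free-tier limit a small 256 MB function hits.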

When I first heard about Lambda, I thought it would be perfect for me, but I was worried about a few things: cold-start time sounded scary, it wasn't obvious how to migrate an existing app, and there was no good local testing story. So I didn't consider using it.

Eventually, in 2020, I gave it another look after hearing more about the benefits of Lambda and how much more mature its ecosystem had become.

My first attempt was using Zappa. It was pleasantly simple to use, and it did convince me that Lambda was the way to go. However, it soon became apparent that Zappa wasn't for me. It was quite opaque in its operation, and there didn't seem to be any integration point or escape hatch into the rest of the CloudFormation ecosystem.

For example, I wanted to put a CDN in front so that I could cache content any way I wanted. That was not possible with Zappa. Even today, its main page suggests using something else, like S3, to serve static content alongside Zappa.

It seemed that I had a fundamental disagreement with the project's architecture and direction. I believed this approach unnecessarily complicated the local testing story. I didn't agree that Flask-generated text content is somehow less cacheable. And I still don't think it's any less "serverless" to serve binary content with Flask when a CDN is in front.

In summary, Zappa convinced me to go serverless but ironically, I wasn't convinced Zappa was the way to go, so I kept searching.

AWS SAM (2020)

AWS Serverless Application Model (SAM) is a tool built around CloudFormation to make it easier to develop serverless applications.

SAM provides, among other things, the following:

- Various CloudFormation transformations that make common serverless application definitions simpler.

- Other helpers to make deployment of Lambda bundles easier for common languages (e.g., Python).

- A harness to test and execute Lambda functions locally. It essentially parses the CloudFormation template to set up a local Lambda environment that's similar enough to the real one.

Since the infrastructure layer is thin, I was able to set up the infrastructure around my Lambda exactly the way I wanted. The cold start time was not bad at all: at worst a second, which was acceptable in my opinion (tons of websites perform much worse). Since there was a CDN in front, the cold start delays were not perceptible most of the time.

I was very pleased with this setup. It was pretty close to the Holy Grail of easy & cheap hosting. The local testing story was acceptable. Excluding the cost of a Route 53 Hosted Zone ($0.50/month), I started paying way less than a dollar per month. Single-command deployment was now possible, and deployments caused no disruption to the service.

There were still things I was unsatisfied with in this setup. Mainly, working with CloudFormation was still a big pain; I had started using CFN professionally, and I still didn't like it. In addition, I didn't see SAM adopted widely, so it wasn't easy to Google problems with it. In other words, it was not something I'd recommend to a friend, unless they were willing to go through the same pain I did: multiple days of trial-and-error around the infrastructure.

Meet CDK (2021)

Finally, this year I gave CDK a try, and I was immediately sold on it. Cloud Development Kit (CDK) improves the CloudFormation experience significantly. It makes CloudFormation so much better that I would always recommend using CDK to define infrastructure, no matter how small your project is.

CDK is essentially a typed CloudFormation template generator. CDK supports writing the infrastructure definition in TypeScript (among many other languages, but please, just use TypeScript; it's not so hard to pick up). That means you get all the usual benefits of typed languages, like earlier detection of errors, plus auto-complete and navigation support in IDEs like Visual Studio Code out of the box. At the end of the day, it still generates ("synthesizes," in CDK parlance) a CloudFormation template, so you will have to know a little bit about how CloudFormation works, but that's not hard.
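To make "typed template generator" concrete, here's a toy Python illustration of the idea. This is not how CDK is implemented, and the Bucket class and synth function are hypothetical. Resources are typed objects that render themselves into CloudFormation JSON, so a missing required field fails immediately when you construct the object, instead of minutes into a deployment.

```python
import json
from dataclasses import dataclass
from typing import Optional


@dataclass
class Bucket:
    """Hypothetical typed wrapper for an AWS::S3::Bucket resource."""
    logical_id: str                    # required: omitting it raises TypeError
    bucket_name: Optional[str] = None
    versioned: bool = False

    def to_cfn(self) -> dict:
        props = {}
        if self.bucket_name:
            props["BucketName"] = self.bucket_name
        if self.versioned:
            props["VersioningConfiguration"] = {"Status": "Enabled"}
        return {self.logical_id: {"Type": "AWS::S3::Bucket", "Properties": props}}


def synth(*resources) -> str:
    """'Synthesize' a CloudFormation template from typed resource objects."""
    template = {"Resources": {}}
    for resource in resources:
        template["Resources"].update(resource.to_cfn())
    return json.dumps(template, indent=2, sort_keys=True)


print(synth(Bucket("AppStore", bucket_name="my-example-bucket", versioned=True)))
```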

Migrating from the raw CloudFormation template was fairly simple because CDK can even import an existing CloudFormation template into a CDK app. After importing it, it was just a matter of moving one construct at a time to CDK. Unlike in a CloudFormation template, referring to an existing resource in CDK was also fairly trivial. It took me less than a day to migrate the whole thing.

This was it, I finally had something good - something I can recommend to a friend. In fact, this blog you are reading is hosted using this exact setup.

SAM still has a place in the CDK world, because it can emulate Lambda locally based on the CDK-generated CFN template if necessary. However, I rarely ended up using it once I had set up the infrastructure so that the local execution environment matched the remote one.

Overview of the Holy Grail

For the rest of the blog, I want to explain how the Holy Grail is put together. I made a runnable starter kit available on GitHub so you can clone and host your own Serverless Flask on AWS easily. I'll include links to code in the post so you can refer back to the actual code.

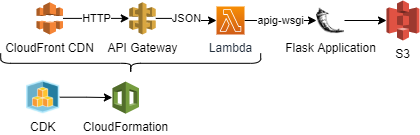

We are going to use all the components discussed previously: CDK, CloudFormation, Lambda, API Gateway, CloudFront CDN and S3. The diagram above shows how they relate to each other.

Let's start from Lambda, since that's where the code runs.

Lambda

Defining Lambda in CDK is pretty straightforward. The following sample shows how it can be done:

```typescript
let webappLambda = new lambda.Function(this, "ServerlessFlaskLambda", {
  functionName: `serverless-flask-lambda-${stageName}`,
  code: lambda.Code.fromAsset(__dirname + "/../build-python/"),
  runtime: lambda.Runtime.PYTHON_3_9,
  handler: "serverless_flask.lambda.lambda_handler",
  role: lambdaRole,
  timeout: Duration.seconds(30),
  memorySize: 256,
  environment: {"JSON_CONFIG_OVERRIDE": JSON.stringify(lambdaEnv)},
  // default is infinite, and you probably don't want it
  logRetention: logs.RetentionDays.SIX_MONTHS,
});
```

(link to code in the starter kit)

By using lambda.Code.fromAsset, you can just dump your self-contained Python environment and let CDK upload it to S3 and link it to Lambda automagically.
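The handler string "serverless_flask.lambda.lambda_handler" names a module path and a function inside it. As a rough mental model (an approximation, not the actual Lambda runtime code), resolving it looks like the sketch below. resolve_handler is a hypothetical name. This also explains why a module named lambda works as a handler even though `import serverless_flask.lambda` would be a syntax error in Python (lambda is a reserved word): importlib accepts the dotted path as a plain string.

```python
import importlib


def resolve_handler(handler_string):
    """Turn "package.module.function" into the function object it names."""
    module_path, _, function_name = handler_string.rpartition(".")
    # Works even if a path segment is a keyword like "lambda", since it's just a string.
    module = importlib.import_module(module_path)
    return getattr(module, function_name)


# In the deployed bundle this would be resolve_handler("serverless_flask.lambda.lambda_handler").
basename = resolve_handler("os.path.basename")
print(basename("/tmp/build-python/app.py"))
```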

There are two more problems we need to tackle before we can actually host a Flask app. First, Lambda doesn't speak HTTP, so something else needs to convert an HTTP request into a JSON event. Second, a Flask app doesn't understand that JSON event either, so something also needs to translate the event into something Flask understands. Using API Gateway and apig-wsgi, I was able to solve both problems nicely.

API Gateway

API Gateway is a fairly complex product. I, myself, am not sure how to explain it. In any case, API Gateway is frequently used to give Lambda an HTTP interface, so the CDK module for API Gateway already provides a construct called LambdaRestApi. The following is all you need to define the API Gateway for the Lambda:

```typescript
let restApi = new agw.LambdaRestApi(this, "FlaskLambdaRestApi", {
  restApiName: `serverless-flask-api-${stageName}`,
  handler: webappLambda, // the Lambda function defined in the previous section
  binaryMediaTypes: ["*/*"],
  deployOptions: {
    throttlingBurstLimit: MAX_RPS_BUCKET_SIZE,
    throttlingRateLimit: MAX_RPS
  }
});
```

(link to code in the starter kit)

binaryMediaTypes is set to all types (*/*), which simplifies handling: API Gateway then treats every content type the same way, as binary.

throttlingBurstLimit and throttlingRateLimit are among the simplest ways I've seen to apply token-bucket-style throttling to a web app. They also serve as a safeguard against unwanted billing disasters.
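AWS describes API Gateway throttling in terms of a token bucket. As a rough mental model of those two settings, here's a minimal token-bucket sketch in Python. The burst limit is the bucket's capacity, and the rate limit is how many tokens are refilled per second. This is a simplified model for illustration, not API Gateway's implementation. The TokenBucket class and its injectable clock (added to make it testable) are my own assumptions.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` tokens/second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate                # plays the role of throttlingRateLimit
        self.capacity = capacity        # plays the role of throttlingBurstLimit
        self.clock = clock
        self.tokens = float(capacity)   # start full so an initial burst is allowed
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, but never beyond the capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # API Gateway would reject this request with 429 Too Many Requests
```

With rate=2 and capacity=5, five back-to-back requests succeed and the sixth is rejected; one second later, two more tokens are available.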

apig-wsgi

The LambdaRestApi object from the previous section takes an HTTP request and hands it over to the Lambda as an event. But Flask, which speaks only the Web Server Gateway Interface (WSGI), doesn't understand this particular format. Fortunately, there is a Python library named apig-wsgi that converts the API Gateway format into WSGI and vice versa. The library is very simple to use: you just wrap the Flask app with it. In the following code, create_app is the function that creates your Flask app.

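```python
from apig_wsgi import make_lambda_handler

from serverless_flask import create_app

# Create the Flask app once per Lambda container, then wrap it for API Gateway.
app = create_app()
inner_handler = make_lambda_handler(app, binary_support=True)


def lambda_handler(event, context):
    return inner_handler(event, context)
```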

(link to code in the starter kit)
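To get a feel for what apig-wsgi does, here's a heavily simplified, hand-rolled Python sketch of the same idea using only the standard library. It builds a WSGI environ from an API Gateway REST (v1) proxy event, calls the WSGI app, and turns the app's output back into a proxy response. The helper names are hypothetical. The sketch skips many details the real library handles (multi-value headers and query strings, cookies, error handling), so use apig-wsgi in practice. For simplicity, it base64-encodes every response body.

```python
import base64
import io
import sys
from urllib.parse import urlencode


def event_to_environ(event):
    """Build a minimal WSGI environ from an API Gateway REST (v1) proxy event."""
    raw_body = event.get("body") or ""
    if event.get("isBase64Encoded"):
        body = base64.b64decode(raw_body)
    else:
        body = raw_body.encode("utf-8")
    headers = event.get("headers") or {}
    environ = {
        "REQUEST_METHOD": event["httpMethod"],
        "SCRIPT_NAME": "",
        "PATH_INFO": event["path"],
        "QUERY_STRING": urlencode(event.get("queryStringParameters") or {}),
        "SERVER_NAME": headers.get("Host", "localhost"),
        "SERVER_PORT": headers.get("X-Forwarded-Port", "443"),
        "SERVER_PROTOCOL": "HTTP/1.1",
        "CONTENT_LENGTH": str(len(body)),
        "wsgi.version": (1, 0),
        "wsgi.url_scheme": headers.get("X-Forwarded-Proto", "https"),
        "wsgi.input": io.BytesIO(body),
        "wsgi.errors": sys.stderr,
        "wsgi.multithread": False,
        "wsgi.multiprocess": False,
        "wsgi.run_once": False,
    }
    for name, value in headers.items():
        key = name.upper().replace("-", "_")
        if key == "CONTENT_TYPE":
            environ["CONTENT_TYPE"] = value
        elif key != "CONTENT_LENGTH":
            environ["HTTP_" + key] = value  # e.g. User-Agent -> HTTP_USER_AGENT
    return environ


def call_wsgi(app, event):
    """Run a WSGI app on a proxy event and build a proxy response dict."""
    captured = {}

    def start_response(status, response_headers, exc_info=None):
        captured["status"] = int(status.split(" ", 1)[0])
        captured["headers"] = dict(response_headers)
        return lambda data: None  # legacy write() callable, unused here

    result = app(event_to_environ(event), start_response)
    try:
        body = b"".join(result)
    finally:
        if hasattr(result, "close"):
            result.close()
    return {
        "statusCode": captured["status"],
        "headers": captured["headers"],
        "body": base64.b64encode(body).decode("ascii"),
        "isBase64Encoded": True,
    }


def hello_app(environ, start_response):
    """A tiny WSGI app standing in for Flask."""
    start_response("200 OK", [("Content-Type", "text/plain")])
    return [f"{environ['REQUEST_METHOD']} {environ['PATH_INFO']}?{environ['QUERY_STRING']}".encode()]
```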

CloudFront

I suggest fronting the API with CloudFront, which is AWS's Content Delivery Network (CDN) service. It serves two main purposes. First, when you create an API Gateway-backed API, your application root is always prefixed by the stage name (e.g., /prod). CloudFront can rewrite the URL to provide a clean URL (/my-url to /prod/my-url). Second, it can improve your application's performance by being closer to your users and caching responses. The following code snippet assembles a simple CloudFront CDN. This is by far the longest CDK snippet:

```typescript
let cdn = new cloudfront.Distribution(this, "CDN", {
  defaultBehavior: {
    functionAssociations: [{
      eventType: cloudfront.FunctionEventType.VIEWER_REQUEST,
      function: new cloudfront.Function(this, "RewriteCdnHost", {
        functionName: `${this.account}RewriteCdnHostFunction${stageName}`,
        // documentation: https://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/functions-event-structure.html#functions-event-structure-example
        code: cloudfront.FunctionCode.fromInline(`
          function handler(event) {
            var req = event.request;
            if (req.headers['host']) {
              req.headers['x-forwarded-host'] = {
                value: req.headers['host'].value
              };
            }
            return req;
          }
        `)
      })
    }],
    origin: new origins.HttpOrigin(restApiUrl, {
      originPath: "/prod",
      protocolPolicy: cloudfront.OriginProtocolPolicy.HTTPS_ONLY,
      connectionAttempts: 3,
      connectionTimeout: Duration.seconds(10),
      httpsPort: 443,
    }),
    smoothStreaming: false,
    viewerProtocolPolicy: cloudfront.ViewerProtocolPolicy.REDIRECT_TO_HTTPS,
    cachedMethods: cloudfront.CachedMethods.CACHE_GET_HEAD_OPTIONS,
    allowedMethods: cloudfront.AllowedMethods.ALLOW_ALL,
    compress: true,
    cachePolicy: new cloudfront.CachePolicy(this, 'DefaultCachePolicy', {
      // needs to be overridden because the names are not automatically randomized across stages
      cachePolicyName: `CachePolicy-${stageName}`,
      headerBehavior: cloudfront.CacheHeaderBehavior.allowList("x-forwarded-host"),
      // allow the Flask session cookie
      cookieBehavior: cloudfront.CacheCookieBehavior.allowList("session"),
      queryStringBehavior: cloudfront.CacheQueryStringBehavior.all(),
      maxTtl: Duration.hours(1),
      defaultTtl: Duration.minutes(5),
      enableAcceptEncodingGzip: true,
      enableAcceptEncodingBrotli: true
    }),
  },
  priceClass: cloudfront.PriceClass.PRICE_CLASS_200,
  enabled: true,
  httpVersion: cloudfront.HttpVersion.HTTP2,
});

new CfnOutput(this, "CDNDomain", {
  value: cdn.distributionDomainName
});
```

(link to code in the starter kit)

Most of the configuration is self-explanatory, but there are a few things that need explanation: domain re-writing and cache control.

Domain re-writing

Domain re-writing is implemented so that Flask knows which domain it is serving content for. This is important if your Flask app needs to know its own domain for things like sub-domain support and absolute URL generation. If you are hosting Flask in a more traditional architecture, this is not an issue, but here we are going through CloudFront and API Gateway, so it's a bit more involved.

CloudFront is capable of passing the Host header through, but that doesn't work with API Gateway in the middle, because API Gateway uses the Host header to distinguish its clients (searching for this suggests it is a common problem).

If you simply pass the Host header through, you will get a mysterious 403 error from API Gateway (most likely because it uses SNI to differentiate originating domains).

Fortunately, we can use a super cool feature named CloudFront Functions to solve this problem. CloudFront Functions lets you give the CDN a JavaScript function that can modify the request and response objects at will, as long as it finishes within about a millisecond. In our setup, the function copies the original Host header into an x-forwarded-host header. We also need to allow that specific header to be forwarded to the origin, which is what the headerBehavior allow list in the cache policy does.

Since the Flask application doesn't really know about the x-forwarded-host, we need to re-write the header once more to restore the Host header:

```python
def lambda_handler(event, context):
    app.logger.debug(event)
    headers = event['headers']
    cf_host = headers.pop("X-Forwarded-Host", None)
    if cf_host:
        app.config["SERVER_NAME"] = cf_host
        # patch host header
        headers['Host'] = cf_host
        event['multiValueHeaders']['Host'] = [cf_host]
        app.logger.info(f"Host header is successfully patched to {cf_host}")
    return inner_handler(event, context)
```

(link to code in the starter kit)

Note that HTTP header casing is inconsistent: CloudFront only accepts lower-case HTTP header names in the configuration, but API Gateway turns them all into Title-Case headers (e.g., X-Forwarded-Host).

After this, the Flask application will work pretty seamlessly with respect to the Host header.
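Because of that casing mismatch, a slightly more defensive version of the header patching could look headers up case-insensitively instead of relying on API Gateway's exact spelling. This is a sketch under that assumption, not the starter kit's code. pop_header and restore_host are hypothetical helpers that operate on a REST API proxy event, and you would call restore_host(event) before handing the event to apig-wsgi.

```python
def pop_header(headers, name):
    """Remove a header regardless of its casing and return its value (or None)."""
    for key in list(headers):
        if key.lower() == name.lower():
            return headers.pop(key)
    return None


def restore_host(event):
    """Move X-Forwarded-Host (set by the CloudFront Function) back into Host."""
    headers = event.get("headers") or {}
    multi = event.get("multiValueHeaders") or {}
    event["headers"], event["multiValueHeaders"] = headers, multi
    cf_host = pop_header(headers, "x-forwarded-host")
    pop_header(multi, "x-forwarded-host")
    if cf_host:
        pop_header(headers, "host")  # drop API Gateway's own Host, whatever its casing
        pop_header(multi, "host")
        headers["Host"] = cf_host
        multi["Host"] = [cf_host]
    return cf_host
```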

Cache Control

The sample CDN configuration caches responses for 5 minutes by default, up to an hour. This is a sensible default for a mostly static website, but there are times when you don't want the response to be cacheable.

Since CloudFront CDN simply follows the HTTP cache directives, you can use the same mechanism to prevent caching of resources.

```python
import time

from flask import make_response


@app.route("/example_json_api")
def example_json_api():
    resp = make_response({"body": "ok", "time": round(time.time())})
    resp.headers["Content-Type"] = "application/json"
    return resp


@app.route("/example_json_api_no_cache")
def example_json_api_no_cache():
    resp = make_response({"body": "ok", "time": round(time.time())})
    resp.headers["Content-Type"] = "application/json"
    # tell CloudFront (and browsers) not to cache this response
    resp.headers["Cache-Control"] = "no-store, max-age=0"
    return resp
```

By examining the time field, you can observe that the first resource is cached for 5 minutes, whereas the second resource is always fetched from the origin.

The current configuration passes through a cookie named session, because that's what Flask uses to store session data. Since the cookie becomes part of the cache key, this effectively disables caching once you start using sessions (e.g., for logged-in users). For more robust control (such as always caching images regardless of cookies), you will want to create new CloudFront behaviours based on the URL.
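To reason about which TTL applies, here's a simplified Python model of how I understand CloudFront combines a cache policy's TTLs with the origin's Cache-Control header. It uses this configuration's values: a minimum of 0 (CDK's default), a default of 5 minutes, and a maximum of 1 hour. It's a sketch for intuition only. It ignores the Expires header and other edge cases, so check the CloudFront developer guide for the authoritative rules. effective_ttl is a hypothetical helper.

```python
def effective_ttl(cache_control, min_ttl=0, default_ttl=300, max_ttl=3600):
    """Approximate how many seconds CloudFront keeps a response (simplified)."""
    directives = {}
    for part in (cache_control or "").split(","):
        name, _, value = part.strip().lower().partition("=")
        if name:
            directives[name] = value
    if directives.keys() & {"no-store", "no-cache", "private"}:
        return min_ttl  # with min_ttl=0, the response isn't cached at all
    for name in ("s-maxage", "max-age"):  # s-maxage takes precedence over max-age
        if directives.get(name, "").isdigit():
            return max(min_ttl, min(int(directives[name]), max_ttl))
    return default_ttl  # no caching directive from the origin
```

Under this model, the two example routes above get 300 seconds (no Cache-Control header) and 0 seconds ("no-store, max-age=0"), which matches the behaviour you see in the time field.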

Session Handling

This section is only relevant if you are planning to use the Flask session.

Session refers to the state that gets persisted across HTTP requests that the client cannot tamper with. For example, one of the ways to implement the "logged in" state is to use a session variable to indicate the current user name. A typical way this is implemented is by storing the session in a database.

It is also possible to implement sessions without a database if you use cryptography (with a different set of trade-offs). This is the approach Flask takes by default (Flask quick start section on session): the session data lives in a cookie that is cryptographically signed. What is relevant in our context is that you need to securely store the secret key used to sign the session. If you were to regenerate the secret key every time (e.g., on every cold start), sessions would not survive.
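To see why the key has to persist, here's a minimal HMAC-signed cookie in Python using only the standard library. This is not Flask's actual cookie format (Flask uses the itsdangerous library). sign_session and load_session are hypothetical helpers that illustrate the idea: the client can read the payload but cannot forge or modify it without the key, and a cookie signed with an old key is rejected once the key changes.

```python
import base64
import hashlib
import hmac
import json


def sign_session(data, secret_key):
    """Serialize `data` and append an HMAC-SHA256 signature (readable, not encrypted)."""
    payload = base64.urlsafe_b64encode(json.dumps(data, sort_keys=True).encode())
    sig = hmac.new(secret_key, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + sig


def load_session(cookie, secret_key):
    """Return the session dict if the signature matches this key, else None."""
    payload, sep, sig = cookie.rpartition(".")
    if not sep:
        return None
    expected = hmac.new(secret_key, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig, expected):  # constant-time comparison
        return None
    return json.loads(base64.urlsafe_b64decode(payload))
```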

In my setup, I decided to use S3 to store the key. You could use AWS Secrets Manager, but it charges per secret per month, which runs against our goal of minimizing cost.

Here's how to define the S3 bucket in CDK:

```typescript
let appStore = new s3.Bucket(this, "S3Storage", {
  blockPublicAccess: BlockPublicAccess.BLOCK_ALL,
  removalPolicy: RemovalPolicy.RETAIN,
  encryption: BucketEncryption.S3_MANAGED,
  bucketName: `${this.account}-serverlessflask-s3storage-${stageName}`
});

// grant permissions to the Lambda IAM Role
appStore.grantReadWrite(lambdaRole);
```

(link to code in the starter kit)

In the code, I opted to simply create a new secret key if one does not exist. The code is not free of race conditions, but it's good enough for our purposes.

Incident response plan: if the key ever gets compromised, you can just delete the S3 object and you will get a new key (this also invalidates all existing sessions).
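The get-or-create logic described above can be sketched like this. To keep it self-contained, InMemoryStore is a hypothetical stand-in for the S3 bucket (with boto3 you would read and write the object with the S3 client's get_object and put_object instead), and the object key name is made up. Deleting the stored key, as in the incident response plan, makes the next call generate a fresh one.

```python
import secrets


class InMemoryStore:
    """Hypothetical stand-in for the S3 bucket: get() returns None if missing."""

    def __init__(self):
        self.objects = {}

    def get(self, key):
        return self.objects.get(key)

    def put(self, key, value):
        self.objects[key] = value


def get_or_create_secret_key(store, object_key="flask-secret-key"):
    """Return the stored secret key, generating and saving one if absent."""
    existing = store.get(object_key)
    if existing is not None:
        return existing
    # Not atomic: two cold starts racing here may each write a different key,
    # and sessions signed with the losing key become invalid. Fine for a hobby site.
    new_key = secrets.token_bytes(32)
    store.put(object_key, new_key)
    return new_key
```

In the app, the result would be assigned to app.secret_key when the Flask app is created.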

Wrapping Up

Feel free to try this yourself with the runnable example code in my serverless-flask-on-aws GitHub repo. I tried to make the sample as realistic as possible: it has simple unit test samples along with very detailed documentation on how to run it.

If you found this helpful, please share with your friends using the permalink. Feel free to tweet at me or email me, if you have any comments.

Cost (Update 2021-12-30)

Only_As_I_Fall on Reddit asked how much this costs. This was my answer:

Since this is a mostly static website, I'll assume not many requests go all the way to Lambda, which means the CDN is the dominating factor (Lambda + API Gateway would not cost much). As of now, loading the main page (with the 5 latest articles) transfers about 120 KiB per page load, or 50 KiB after compression. Let's assume none of it is cached. Then 1 GiB gives me about 20,000 hits. I opted for "price class 200", which can cost up to $0.120/GiB.

CloudFront now gives 1 TiB per month for free, so it's free up to about 20 million hits per month, or 7.9 requests per second sustained (as a comparison, reddit gets just 80x more than that). After that, it's about $6 per million visits.
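Here's the same back-of-the-envelope arithmetic as a small Python script. It uses the figures above (50 KiB per compressed page load, up to $0.120/GiB, and 1 TiB free) plus an assumed 30-day month. Without rounding down to 20,000 hits/GiB, the numbers come out slightly higher: about 21.5 million free hits, or roughly 8.3 requests per second.

```python
KIB_PER_PAGE = 50            # compressed main page, from the estimate above
PRICE_PER_GIB = 0.120        # upper bound for price class 200, USD
FREE_GIB_PER_MONTH = 1024    # 1 TiB free tier, as stated above
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumed 30-day month

KIB_PER_GIB = 1024 * 1024
hits_per_gib = KIB_PER_GIB // KIB_PER_PAGE
free_hits = FREE_GIB_PER_MONTH * KIB_PER_GIB // KIB_PER_PAGE
free_rps = free_hits / SECONDS_PER_MONTH
cost_per_million = 1_000_000 * KIB_PER_PAGE / KIB_PER_GIB * PRICE_PER_GIB

print(hits_per_gib, free_hits, round(free_rps, 1), round(cost_per_million, 2))
# -> 20971 21474836 8.3 5.72
```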

ddb-local - Python wrapper for DynamoDBLocal

While working on a Python project, I wanted to write some tests that interact with Amazon DynamoDB.

After a bit of searching, I found that there is an official local version of DynamoDB (DynamoDB Local). This is cool, I thought. Reading the instructions made me realize, though, that none of the options suited my use case particularly well.

The Docker version was technically "standalone", but it was not something I could integrate into a unit test harness easily. The Maven version was the closest to what I was looking for, but it was not usable from a Python application.

Finally, the tarball version looked promising, but it still had a number of annoyances. First, it had to be downloaded and installed somewhere. Then you'd need to start the database process as part of your test and terminate it properly when your test is done.

What I really wanted was to be able to write something like this:

```python
import boto3
import pytest

from ddb_local import LocalDynamoDB


# Creates a throw-away database
@pytest.fixture
def local_ddb():
    with LocalDynamoDB() as local_ddb:
        yield local_ddb


# Write a test using the temporary database
def test_with_database(local_ddb):
    ddb = boto3.resource("dynamodb",
                         endpoint_url=local_ddb.endpoint)
    # do something with ddb
```

I couldn't find anything that resembles this, so I decided to roll up my sleeves and write it myself. It took me about a day but I was able to write something presentable. I gave it a very logical name, too: ddb-local.

The library does everything I want - it handles the database installation, and it gives a Python-friendly interface.

Prerequisite

One thing you will have to do yourself is install Java. This is because installing Java is simple for end users but not for a library.

For example, on Ubuntu 20.04, you can run this command to install OpenJDK 17:

```shell
sudo apt install -y openjdk-17-jdk
```

Using it in your Python code

To start using it, you can run one of the following commands, depending on your needs:

```shell
# Install globally (not recommended), or install inside a virtualenv.
pip install ddb-local

# Install for your user only.
pip install --user ddb-local

# Using pipenv, install as a dev dependency.
pipenv install -d ddb-local
```

The library handles the installation of the latest DynamoDB Local binary for you. It also manages the database process. You can simply use the context manager idiom (i.e., with LocalDynamoDB() as ddb:) to start the database and to ensure it shuts down when you are done with it.
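For the curious, the general "start on enter, stop on exit" pattern looks roughly like the Python sketch below. It is not ddb-local's actual code. ManagedProcess is a hypothetical class, and a real implementation would also wait for the port to accept connections before returning. For DynamoDB Local, the command would be something like java -Djava.library.path=./DynamoDBLocal_lib -jar DynamoDBLocal.jar -inMemory, run from the unpacked directory.

```python
import subprocess
import sys


class ManagedProcess:
    """Hypothetical sketch: start a background process on enter, stop it on exit."""

    def __init__(self, args):
        self.args = args
        self.proc = None

    def start(self):
        self.proc = subprocess.Popen(self.args)
        return self

    def stop(self):
        if self.proc is not None and self.proc.poll() is None:
            self.proc.terminate()
            try:
                self.proc.wait(timeout=10)
            except subprocess.TimeoutExpired:
                self.proc.kill()  # escalate if the process ignores SIGTERM
                self.proc.wait()

    def __enter__(self):
        return self.start()

    def __exit__(self, exc_type, exc, tb):
        self.stop()   # runs even if the with-block raised
        return False  # don't swallow exceptions


# A long-running Python process stands in for the database here.
with ManagedProcess([sys.executable, "-c", "import time; time.sleep(60)"]) as p:
    print("running:", p.proc.poll() is None)
```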

Usage Examples

pytest

Pytest is a popular testing framework in Python (it's also my favorite framework). Since this was my main motivation, the code I wanted to write works as-is 😉

```python
import boto3
import pytest

from ddb_local import LocalDynamoDB


# Creates a throw-away database
@pytest.fixture
def local_ddb():
    with LocalDynamoDB() as local_ddb:
        yield local_ddb


# Write a test using the temporary database
def test_with_database(local_ddb):
    ddb = boto3.resource("dynamodb",
                         endpoint_url=local_ddb.endpoint)
    # do something with ddb
```

Basic Persistent Database

```python
import boto3

from ddb_local import LocalDynamoDB

with LocalDynamoDB() as local_ddb:
    # pass the endpoint.
    ddb = boto3.client('dynamodb', endpoint_url=local_ddb.endpoint)
```

Without a Context Manager

If you can't use it with a context manager, you can also call start() and stop() manually. In this case, it's your responsibility to make sure stop() is called.

```python
from ddb_local import LocalDynamoDB

db = LocalDynamoDB()
db.start()
try:
    print(f"Endpoint is at {db.endpoint}")
finally:
    # make sure the database process is shut down even if something fails
    db.stop()
```

Other Escape Hatches

I am a big believer in providing escape hatches in libraries and this library is no exception.

Here are some of the constructor options that also serve as "escape hatches":

- extra_args: If you'd like to specify an option supported by DynamoDBLocal that is not supported by the library, you can pass it using this argument.
- unpack_dir: If you'd like to provide your own tarball, you can install DynamoDBLocal yourself and then just point to the root of the directory.
- debug: Pass through the output from the underlying process.
- port: Use a different port than the default.

Wrap-up

You can find the source code on GitHub. It's MIT-licensed, so you can use it for whatever purpose you want. It would be nice if you let me know if you found it useful 😀